How to code neural networks (Part 1)

Ever seen a robot performing tasks on its own? If yes, then you have probably asked these questions:

- How was it made to think like humans?

- How was it programmed to perform its tasks on its own?

In this 2-part article, I will try to answer both of these. We will also

make our own neural network!

Let's start with the first one: "How do computers think?" If you want to jump directly to the coding, click this link to go to part 2.

How do computers think and learn?

Before we answer the above question we must understand how the human brain

works. The human brain is a big neural network. A neural network is a

network or circuit of neurons. Neurons are the fundamental units of the

brain. Neurons receive signals from previous neurons and in turn, pass

signals on to other neurons.

Almost all computational systems are made inspired by biological neural

networks. Computers learn through Artificial Neural Networks(ANNs). It is made by

mimicking the human brain. ANNs are comprised of node layers. There is an

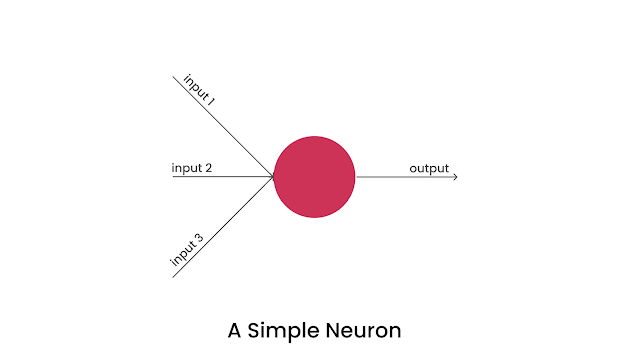

input layer and an output layer. Each node is a neuron that has some inputs

and outputs.

This is the simplest form of a brain. It is also known as a perceptron. It has some inputs, does some processing, and gives an output. Each input is associated with a weight, which we will discuss later.

These neurons are used as building blocks to make big neural networks.

In this article, we will be making a perceptron in JavaScript.

How does a perceptron work?

Before we move forward to actual coding we must further understand how

the perceptron actually works and how it gives an output. Consider this

perceptron:

As we discussed earlier, each input of a neuron has a weight associated with it. In most cases, an input of a neuron is 0 or 1, and a weight is a decimal between 0 and 1. What a perceptron does is take a weighted sum of all the inputs.

Weighted Sum = W1X1 + W2X2 + W3X3 + ....

This weighted sum is passed on as a single input to the activation function. In simple terms, the activation function decides whether the neuron should be activated or not. Whatever comes out of the activation function is the output of the perceptron.

So the output of the perceptron can be written as:

Output = Activation(W1X1 + W2X2 + W3X3 + ....)
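The two formulas above can be sketched in JavaScript roughly like this. The function names `weightedSum` and `perceptronOutput` are my own picks for illustration, and I am assuming the inputs and weights arrive as two arrays of the same length. The numbers in the example are made up, and the activation used there just returns its input unchanged so that the weighted sum is visible.

```javascript
// Multiply each input by its weight and add the results together.
// Assumption: `inputs` and `weights` are arrays of the same length.
function weightedSum(inputs, weights) {
  let sum = 0;
  for (let i = 0; i < inputs.length; i++) {
    sum += weights[i] * inputs[i];
  }
  return sum;
}

// Output = Activation(weighted sum).
// The activation is passed in as a function, so it can be swapped freely.
function perceptronOutput(inputs, weights, activation) {
  return activation(weightedSum(inputs, weights));
}

// Made-up example: (0.5)(1) + (0.25)(0) + (0.25)(1) = 0.75
console.log(perceptronOutput([1, 0, 1], [0.5, 0.25, 0.25], (x) => x)); // 0.75
```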

We will take an example to understand all this. Consider an AND gate. It takes two inputs and only gives an output of 1 if both the inputs are 1. Now in this case our output is always 0 or 1. So we need a function that always returns a value of 0 or 1.

So let's take this as our activation function:

f(x) = 0, if x < 0.5

f(x) = 1, if x >= 0.5

This function will always return 0 or 1 for all values of x. The

activation function is chosen depending upon the problem. There are many

types of activation functions but that is out of the scope of this

article.
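As a small sketch, the activation function chosen above could look like this in JavaScript. The name `activation` is just what I am calling it here.

```javascript
// Step activation from this article:
// returns 0 when x is below 0.5 and 1 when x is 0.5 or more.
function activation(x) {
  return x >= 0.5 ? 1 : 0;
}

console.log(activation(0.2)); // 0
console.log(activation(0.5)); // 1
console.log(activation(0.7)); // 1
```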

We are almost ready to get an output from the perceptron. Just one more step: we still need to figure out what the weights will be. For this example, let's take two random decimals between 0 and 1, say 0.5 and 0.7.

Now we can get an output from our example neuron, so let's get calculating. Let's take 0 and 1 as sample input, i.e. x1 = 0 and x2 = 1.

Therefore,

weighted sum = (0.5)(0) + (0.7)(1) = 0.7

Now let's pass this through the activation function:

output = f(0.7) = 1.

Uh oh! That is wrong. An AND gate gives 0 for the inputs 0 and 1, so the answer should be 0. That is because the weights are wrong. The weights of the perceptron have to be adjusted so that it gives the right output. This process of adjusting the weights is called training or learning. So naturally, a question arises: how do we adjust the weights? I'll answer this in the next segment.
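To see how far off the untrained weights are, here is a rough sketch that runs the example perceptron (weights 0.5 and 0.7) on all four input combinations of an AND gate. The names `predict`, `andGate` and `results` are hypothetical helpers for this illustration, not code from part 2.

```javascript
// Step activation from this article.
const activation = (x) => (x >= 0.5 ? 1 : 0);

// The example weights picked in the text.
const weights = [0.5, 0.7];

// Every input combination of an AND gate with its correct output.
const andGate = [
  { inputs: [0, 0], expected: 0 },
  { inputs: [0, 1], expected: 0 },
  { inputs: [1, 0], expected: 0 },
  { inputs: [1, 1], expected: 1 },
];

// Weighted sum of the inputs, passed through the activation function.
function predict(inputs, weights) {
  const sum = inputs.reduce((total, x, i) => total + weights[i] * x, 0);
  return activation(sum);
}

// Record the perceptron's answer for each row and whether it is right.
const results = andGate.map(({ inputs, expected }) => {
  const output = predict(inputs, weights);
  return { inputs, expected, output, correct: output === expected };
});

for (const r of results) {
  console.log(r.inputs, "expected", r.expected, "got", r.output, r.correct ? "ok" : "wrong");
}
```

With these weights, the rows for inputs (0, 1) and (1, 0) come out as 1 instead of 0, so two of the four answers are wrong.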

How to train a perceptron?

There are many ways to train neural networks. We will be learning a

method known as Supervised learning.

Supervised learning is the process in which we give the neural

network a sample input and the correct output. The neural network compares

its output to the correct output and corrects the weights according to the

error. Now let's understand how this all works.

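In the earlier example, our perceptron gave an output of 1, but the output should have been 0. We measure the error as the correct output minus the perceptron's output, so error = 0 - 1 = -1. The negative sign tells us that the weights need to go down.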

Now we can change all the weights of the neuron with this simple formula:

ΔW = W * error * learning rate

So new weight = W + ΔW

Note: You have to calculate ΔW individually for each weight.
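Here is a minimal sketch of one weight adjustment using the formula above. I am assuming the error is measured as correct output minus actual output, so that an output that is too high pulls the weights down. The function name `adjustWeights` is made up for this example, and 0.01 is the learning rate value this article settles on further down.

```javascript
// Apply ΔW = W * error * learning rate to every weight individually.
// Returns a new array and leaves the original weights untouched.
function adjustWeights(weights, error, learningRate) {
  return weights.map((w) => {
    const deltaW = w * error * learningRate;
    return w + deltaW;
  });
}

const expected = 0; // correct output of the AND gate for inputs 0 and 1
const output = 1;   // what the example perceptron actually gave
const error = expected - output; // assumption: error = correct - actual = -1

// Both weights shrink by 1%: roughly 0.495 and 0.693.
console.log(adjustWeights([0.5, 0.7], error, 0.01));
```

Note that this follows the article's formula, which scales each weight by itself. Many textbook versions of the perceptron rule multiply by the input instead.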

Learning rate:

We don't want to make big changes to the weights in one go; otherwise, the perceptron will not learn anything. To tackle this, we use a learning rate. It is a small number that we multiply the change in weight by.

For our example, let's take the learning rate = 0.01.

That's it! All we need to do is repeat this training step thousands of times, and over time the perceptron will give more and more accurate outputs.
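Putting the pieces together, a rough sketch of the whole training process for the AND gate might look like the code below. This is only an illustration under a few assumptions: the names `predict`, `train` and `andGate` are hypothetical, the error is taken as correct output minus actual output, and 1000 rounds is an arbitrary number of repetitions.

```javascript
// Step activation from this article.
const activation = (x) => (x >= 0.5 ? 1 : 0);

// Weighted sum of the inputs, passed through the activation function.
function predict(inputs, weights) {
  const sum = inputs.reduce((total, x, i) => total + weights[i] * x, 0);
  return activation(sum);
}

// One training step: compare the output with the correct answer and
// adjust every weight with ΔW = W * error * learning rate.
function train(inputs, expected, weights, learningRate) {
  const error = expected - predict(inputs, weights); // assumption: correct - actual
  return weights.map((w) => w + w * error * learningRate);
}

// Training data: every input combination of an AND gate.
const andGate = [
  { inputs: [0, 0], expected: 0 },
  { inputs: [0, 1], expected: 0 },
  { inputs: [1, 0], expected: 0 },
  { inputs: [1, 1], expected: 1 },
];

let weights = [0.5, 0.7]; // the starting weights from the example
const learningRate = 0.01;

// Show the perceptron every example again and again.
for (let round = 0; round < 1000; round++) {
  for (const { inputs, expected } of andGate) {
    weights = train(inputs, expected, weights, learningRate);
  }
}

const predictions = andGate.map(({ inputs }) => predict(inputs, weights));
console.log(predictions); // [ 0, 0, 0, 1 ]
```

Once every answer is correct the error is 0, so the weights stop changing and the extra rounds do no harm.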

Hope you understood the concepts behind the perceptron. In the next

article, I will explain how to implement all that you have learned in

code.
